Finding Leads for My Services Website: Web Scraping? Targeting Business URLs, Part 3

At the end of the last post I listed a few steps for my process:

Part 1. Initially, broad targeting: harvesting personal emails with searches that include gmail.com, etc.

Part 2. I need to find some Facility Management Directories and do a web crawl on some of those.

To do that I could use the Firefox add-on Web Scraper, but it is slow and locks up my browser while it is running.

Instead I used OutWit Hub (free version), which you can find on their site, Outwit.com. I had a look at it in an earlier post.

While looking for that link I saw that there is also an Email Sourcer (free version) that you can download. I have just done so and will try it shortly.

I use the Outwit scraper as it allows me to continue to use my preferred Firefox browser while the scrape is proceeding in the background.

The video below was interesting in that the process was quite simple. The main tool he uses for checking emails is now a paid service.

https://youtu.be/wVcs7jaf8v4

The interesting takeaways from the video, in my opinion, were:

- Free SEO Tools by SEO Weather for shortening URLs

- Google Guide for advanced search operators.

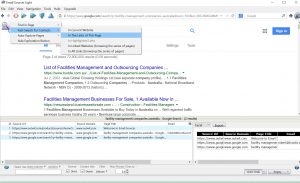

I initially used OutWit to load some Google search pages, such as “Facility Management Companies US”, and then set up a scraper to take each company’s URL and text from the results listed in the Google search. As I’d set Google search results to the maximum of 100 per page, each scrape harvested 100 rows of data that could then be viewed.
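For reference, the paging can also be written into the search URL itself instead of changing the Google settings. Below is a minimal Python sketch that builds one URL per results page. It assumes Google still honours the `num` (results per page) and `start` (offset of the first result) query parameters; Google can change or drop these at any time, so treat this as an illustration rather than a tested recipe. The function name `google_search_pages` is my own.

```python
from urllib.parse import urlencode


def google_search_pages(query, pages, per_page=100):
    """Build one Google search URL per results page.

    Assumption: Google honours `num` (results per page) and `start`
    (zero-based offset of the first result). This may no longer hold.
    """
    urls = []
    for page in range(pages):
        params = {"q": query, "num": per_page, "start": page * per_page}
        # urlencode turns spaces into '+' and ints into strings.
        urls.append("https://www.google.com/search?" + urlencode(params))
    return urls


for url in google_search_pages("Facility Management Companies US", pages=3):
    print(url)
```

Each URL could then be opened in OutWit in turn, which replaces the "change the Google search to the next page" step with a list you can work through.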

Open OutWit Hub Lite and enter the Google search URL.

Use the browser to find the markers

View the page source and find the items that you want. You want to scrape data that is structured the same way in every search result. OutWit Hub Lite needs the marker before and the marker after the data that you want to pull. It is best to use a browser: right-click and choose “Inspect Element”, and it will show you the marker before and the marker after, which you can copy and paste into the OutWit scraper you are setting up.

Put the marker codes into the scraper in OutWit
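To make the “marker before / marker after” idea concrete, here is a rough Python equivalent. It is only a sketch of the concept, not how OutWit is actually implemented, and the function name `scrape_between` and the sample HTML are hypothetical (real Google result markup is different and changes often). It scans the page left to right and returns every piece of text found between the two markers, so one record per search result comes out in order.

```python
def scrape_between(html, marker_before, marker_after):
    """Return every piece of text that sits between the two markers, in order."""
    results = []
    pos = 0
    while True:
        start = html.find(marker_before, pos)
        if start == -1:
            break  # no more opening markers
        start += len(marker_before)
        end = html.find(marker_after, start)
        if end == -1:
            break  # opening marker without a closing one
        results.append(html[start:end].strip())
        pos = end + len(marker_after)  # continue after this record
    return results


# Hypothetical snippet standing in for a search results page.
sample = (
    '<div class="result"><cite>https://www.example-fm.com.au</cite></div>'
    '<div class="result"><cite>https://www.another-fm.com</cite></div>'
)
print(scrape_between(sample, "<cite>", "</cite>"))
# ['https://www.example-fm.com.au', 'https://www.another-fm.com']
```

This also shows why the markers need to be unique to the data you want: if the "before" marker appears elsewhere on the page, you pick up extra rows.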

Scrape pages using Outwit and export

Then go to the Google search page (if there is more than one, select the first), press the scraper button, execute it and export the results to a CSV file. After that, change the Google search to the next page and repeat until all the pages have been viewed, scraped and exported to files. Then join all the files together.
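Joining the exports can also be scripted instead of copying and pasting between sheets. A minimal Python sketch using the standard `csv` and `glob` modules, assuming every export has the same header row (same scraper, same columns) and is UTF-8 encoded; the function name `join_csv_exports` and the file names are hypothetical.

```python
import csv
import glob


def join_csv_exports(pattern, out_path):
    """Concatenate CSV exports matching `pattern` into one file with a single header row.

    Assumes all exports share the same header and are UTF-8 encoded.
    Returns the number of data rows written.
    """
    header = None
    rows_written = 0
    with open(out_path, "w", newline="", encoding="utf-8") as out_file:
        writer = csv.writer(out_file)
        # sorted() is alphabetical, so page_10 sorts before page_2;
        # zero-pad the page numbers if the order matters.
        for path in sorted(glob.glob(pattern)):
            with open(path, newline="", encoding="utf-8") as in_file:
                reader = csv.reader(in_file)
                file_header = next(reader, None)
                if file_header is None:
                    continue  # skip empty files
                if header is None:
                    header = file_header
                    writer.writerow(header)  # write the header only once
                for row in reader:
                    writer.writerow(row)
                    rows_written += 1
    return rows_written


# Hypothetical usage, one export per Google results page:
# join_csv_exports("outwit_page_*.csv", "all_companies.csv")
```

Keep the output file name outside the input pattern, otherwise a second run would read the joined file back in.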

Excel sheet with all the exports.

I then take each company URL and check it in Chrome to see which emails appear.

Chrome using the Email Extractor plug-in

Using the advanced search “allinurl: xxxxxxx.com.au”, I see which emails are harvested and save them to a separate file.

Unfortunately, a lot of the emails are not viable, and nearly all the ones containing people’s names were the invalid ones. Those were the more specific ones I was interested in, so I decided to check a few of the failed ones.
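The two steps above (turning a company URL into an `allinurl:` query, then pulling email addresses out of the page text) can be sketched in Python with the standard library. This is an assumption-laden illustration, not what the Email Extractor plug-in does internally: the regular expression is deliberately simple, so it will miss some valid addresses and can pick up false positives such as image names like `logo@2x.png`. The function names are hypothetical, and `site_domain` assumes the URL includes its scheme (`https://`).

```python
import re
from urllib.parse import urlparse

# Deliberately simple pattern: good enough for a first pass, not RFC-complete.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def site_domain(url):
    """Reduce a company URL (with scheme) to its bare domain, dropping a leading 'www.'."""
    netloc = urlparse(url).netloc.lower()
    return netloc[4:] if netloc.startswith("www.") else netloc


def allinurl_query(url):
    """Build the advanced-search string for one company site."""
    return "allinurl: " + site_domain(url)


def extract_emails(text):
    """Return unique email-like strings, lower-cased, in order of first appearance."""
    seen = []
    for match in EMAIL_RE.findall(text):
        email = match.lower()
        if email not in seen:
            seen.append(email)
    return seen


print(allinurl_query("https://www.example-fm.com.au/contact"))
# allinurl: example-fm.com.au
print(extract_emails("Email Info@example-fm.com.au or info@example-fm.com.au"))
# ['info@example-fm.com.au']
```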

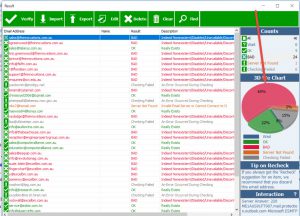

Validating the validator

I looked for other email validators and found this post. I tried the first option, Verify Email.org; it only checks one email at a time, but it confirmed what Dr Email Verifier said. Then I tried Mailtester: the online site lets you test one email at a time, but there was a trial download version that allowed me to check 20 emails, and the results were:

So it looks like Dr Email Verifier is doing an OK job.

Of the 119 emails I harvested with this process yesterday, only 31 were valid, a success rate of about 26%, and a lot of those were generic ones like “sales@…” or “info@…”. Only 9 of the valid ones belonged to named people, so about a 7.6% success rate from that harvesting method. What I’m doing in the above process is not that effective. Actually, a couple of those 9 were duds, so only 7 emails went out, which brings it down to about 6%.
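The figures above are simple ratios against the 119 harvested emails, and splitting generic role addresses from named ones is easy to automate. A small Python sketch: the counts are the ones from this post, while the `GENERIC_PREFIXES` set and the function names are my own assumptions, so extend the list to suit your data.

```python
# Hypothetical list of role-based prefixes; extend it to suit your data.
GENERIC_PREFIXES = {"info", "sales", "admin", "enquiries", "contact", "office", "support"}


def is_generic(email):
    """True if the part before '@' is a role address such as info@ or sales@."""
    return email.split("@", 1)[0].lower() in GENERIC_PREFIXES


def rate(part, whole):
    """Percentage of `whole` that `part` represents, rounded to one decimal place."""
    return round(100 * part / whole, 1)


harvested, valid, valid_named, sent = 119, 31, 9, 7
print(rate(valid, harvested), rate(valid_named, harvested), rate(sent, harvested))
# 26.1 7.6 5.9

sample = ["info@example.com", "jane.smith@example.com", "Sales@example.com"]
print([e for e in sample if not is_generic(e)])
# ['jane.smith@example.com']
```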

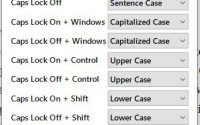

Because there were so few, I could easily split out the first name into a separate column for the salutation. As it came from an email address, it was all in lower case. To capitalise the first letter, use the formula =PROPER(A2) in Excel in a helper column. Then copy the column with the capitalised names and paste it as VALUES over the original lower-case column.

This is also not an elegant workflow: I’m hopping between programmes and Excel sheets to get the work done. I’ll think about whether I could design a better scraper that targets emails, and I’ll have to look into how to code that. I would most probably have to go to each company’s website and look for the emails there. The markers may be different on each company’s page, although the preceding one could be something like “mailto”.
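The same salutation step could be done in Python before the data ever reaches Excel. A minimal sketch, assuming addresses follow patterns like `jane.smith@` or `jane@`; something like `jsmith@` would come out as “Jsmith”, so the results still need a quick manual check. The function name `salutation_name` is hypothetical. For a single word, `str.capitalize()` behaves like Excel’s PROPER: first letter upper case, the rest lower case.

```python
def salutation_name(email):
    """Guess a first name from addresses like jane.smith@ or jane_smith@.

    Keeps the text before '@', cuts it at the first '.', '_' or '-',
    and capitalises it the way PROPER() does for a single word.
    """
    local = email.split("@", 1)[0]
    for sep in "._-":
        local = local.split(sep, 1)[0]
    return local.capitalize()


for address in ["jane.smith@example.com", "PETER_o@example.com.au", "mary@example.com"]:
    print(salutation_name(address))
# Jane
# Peter
# Mary
```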
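Following the “mailto” idea above, a minimal sketch using only Python’s standard `html.parser` module: it collects addresses from `<a href="mailto:...">` links on a page. It assumes the site publishes emails as mailto links; addresses written as plain text, or hidden behind contact forms or JavaScript, won’t be found. `MailtoCollector`, `mailto_emails` and the example URL are hypothetical names, not an existing tool.

```python
from html.parser import HTMLParser
from urllib.parse import unquote


class MailtoCollector(HTMLParser):
    """Collect unique addresses from <a href="mailto:..."> links."""

    def __init__(self):
        super().__init__()
        self.emails = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names for us.
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value and value.lower().startswith("mailto:"):
                # Drop the 'mailto:' scheme and any '?subject=...' part.
                address = unquote(value[len("mailto:"):].split("?", 1)[0]).strip().lower()
                if address and address not in self.emails:
                    self.emails.append(address)


def mailto_emails(html):
    parser = MailtoCollector()
    parser.feed(html)
    parser.close()
    return parser.emails


# Fetching a real page (hypothetical URL) with the standard library:
# from urllib.request import urlopen
# html = urlopen("https://www.example-fm.com.au/contact").read().decode("utf-8", "replace")
page = '<a href="mailto:Jane.Smith@example.com?subject=Hi">Jane</a> <a href="/about">About</a>'
print(mailto_emails(page))
# ['jane.smith@example.com']
```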

Email Sourcer light is a DUD

It runs nicely and crawls links to find emails, but in the free version it then overwrites the emails so they are unusable. The pro version costs US$69. Unfortunately, it does not tell you that the free version is useless until you download and try it. A bit sneaky, and not a marketing method I approve of, but the OutWit free web scraper is nice. That’s an hour of my time wasted.

Banners on other sites

One resource I had not got around to using was my existing websites, to tell their visitors about the new site.

I used a simple header banner on my website when I was doing a MailChimp marketing exercise offering free lessons. I quite like the header banner: it is discreet and doesn’t annoy as much as pop-up and dynamic banners. I used the Simple Banner plugin for the site. One of the issues I have had in the past is getting the background colour to match other colours on the site; I have usually fiddled away with it and finally accepted a ‘near enough’ solution. This time I used an online colour picker. So easy, why didn’t I do this before? The site I used was Color Picker Online, which lets you either use an image or link to a URL. I tried the first method and got a great match. Then I checked with the second method, and the colours selected were slightly different. Definitely a great tool; it saved me a great deal of time.

Anyway, after getting the banner as I wanted it on one site, I used the same setup for my other sites. Hopefully this will encourage visitors to one site to look at the other. Generally they are people who are interested in the topic, so maybe this will bring a bit of traffic to the site.

End thoughts

A day’s work for 7 email addresses!! Looked at from that perspective, it is not an efficient use of time. This will need some mulling over.

There are a lot of paid email scrapers, e.g. the OutWit one, which costs US$45 for a year’s license. In terms of investing time and energy, maybe a tool like that is worthwhile, although the emails will still need to be validated. Maybe that is the method to use. But going through the process gives you an understanding of what needs to be considered in the whole procedure. Also, in focusing on the process, maybe I am losing sight of the end objective, which is to drive viewers to the site, specifically viewers who are interested in the services and some who may consider using them.

At this point in time I am cash poor but have time to explore solutions. I am learning a lot. At present, though, I’m not being that effective.

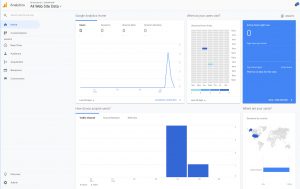

Here are the metrics of the site. Very sad 🙁

Still, as my father used to tell me, “there is only one way to go from here”. We’ll see what comes next.

Things that I’ve picked up that are useful (mainly in this part of the process as they are fresh in my memory):

- Free SEO Tools by SEO Weather for shortening URLs (the URL trimmer). This is a quick tool that I think will come in handy, especially with the OutWit crawls.

- Google Guide for advanced search operators. This will hopefully extract more from fewer queries. I will have to practise with them to see if they are effective.

- OutWit Hub Light from Outwit.com. Definitely useful.

- Dr Email Verifier. It seems to be accurate and works on most company email addresses (good apart from unsupported servers like Yahoo), and I cross-checked it with other validation tools.

- Color Picker Online was a good find for web stuff.

- The banner on my existing websites. Hopefully that will get some people to explore the DataIKnoW site.

- Thunderbird Mail Merge

Not so useful:

- OutWit Email Sourcer Light. Of no use, but the paid version looks as if it will be useful.

- I don’t think the untargeted mailout is working so far: 58 + 124 = 182 emails and no response. I need to have another look at the template; it probably needs a couple of rewrites.

Another thing I think is relevant to this process is getting things out there and working. As this is a passive site, things need to percolate down and gain traction. That is my understanding of the process so far: by sending stuff out into hyperspace, something has been tried and you can move on to the next idea. More attempts at different things will increase general awareness of the site. One video I saw suggested 3 to 6 months for some sort of traction.

I seem to have that traction regarding OpenMaint on my sites, as they come up quite high in the rankings for “OpenMaint Setup” and “OpenMaint Configuration”. So I suppose time is a factor in the process.

So the journey continues, onwards and upwards.

I think I need to revisit Part 2 of the process, finding company URLs to scrape for email addresses. That’s another post.